Removing child sexual exploitation and abuse materials: call to action


The clue is in the name: the ‘World Wide Web’ is a truly global entity, so it is both natural and welcome that the United Nations is taking a growing interest in preventing harm and conflict online, just as it has done offline since its founding in 1945.

At the end of June, the United Nations Office on Drugs and Crime, in partnership with the UK Government, hosted an expert group meeting in Vienna on preventing child sexual abuse material (CSAM). The meeting aimed to discuss gaps and limitations in current approaches to the problem and to develop new ways of achieving two linked goals: removing CSAM images from the internet, and preventing known CSAM from being re-uploaded, as part of a comprehensive strategy.

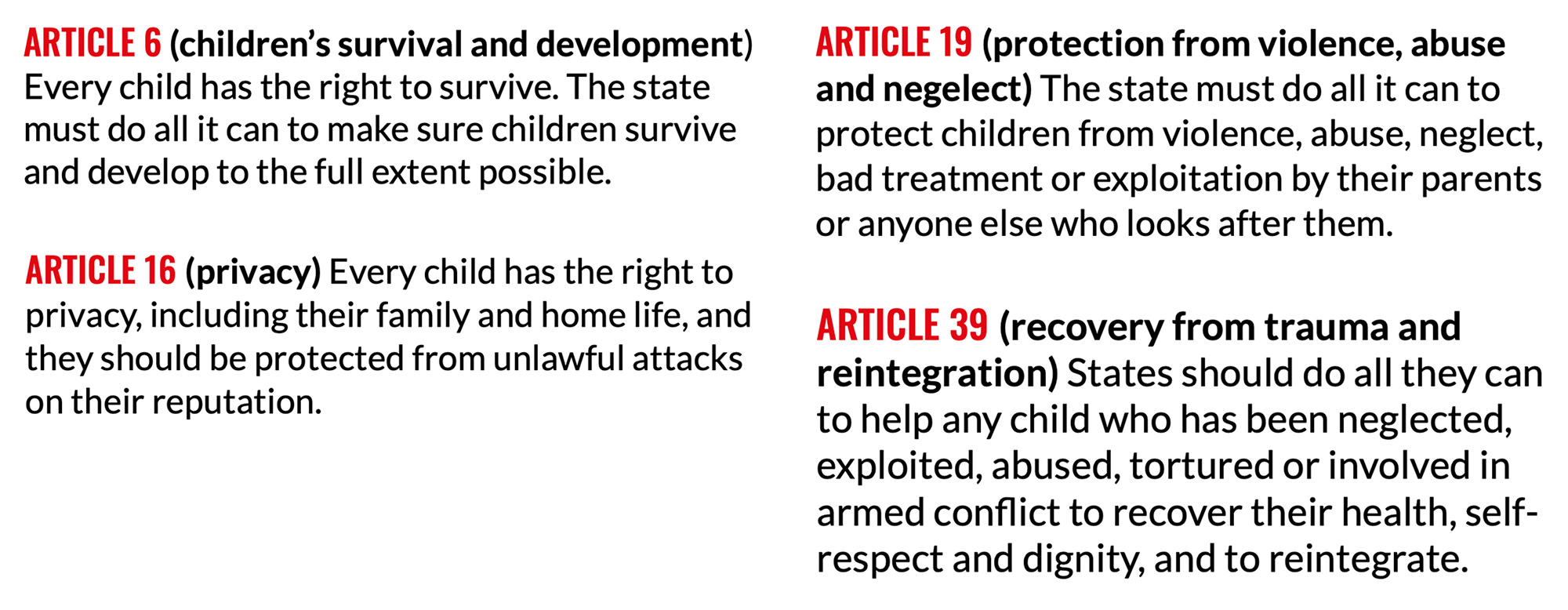

A background paper produced ahead of the event began from first principles, pointing out that CSAM breaches the Convention on the Rights of the Child, specifically the rights to human dignity, privacy and healthy development, which are documented in Articles 6, 16, 19 and 39.

Between 1997 and 2021, the number of internet users worldwide grew from around 70 million to over 5.3 billion (https://www.internetworldstats.com/stats.htm). In 2012, the National Center for Missing and Exploited Children (NCMEC) CyberTipline received 415,650 CSAM reports. Ten years later, in 2022, the number of reports received by NCMEC had grown to 32 million.

After discussing the impact of CSAM on both the child and wider society, the paper considers current efforts to combat it, singling out Google’s Hash Matching API and Microsoft’s PhotoDNA for praise. But it also criticises the lacklustre approach of many states worldwide, perhaps influenced by the US “Section 230” model, which shields online platforms from liability for what their users post. NCMEC reports that since 2006, 150 countries have refined or implemented anti-CSAM legislation. However, only “140 countries criminalise simple CSAM possession, and only 125 countries define CSAM,” meaning that 56 and 71 countries, respectively, do not.

The global public-private partnership created by then UK Prime Minister David Cameron is praised as one of “an impressive array of voluntary initiatives.” It now has 101 countries, 65 companies (including VerifyMy) and 87 NGOs signed up.

But there is clearly much more to be done. Through Project Arachnid, the Canadian Centre for Child Protection (C3P) found a way of connecting web-crawling technology to a database of fingerprints (or hashes) of classified CSAM images. Over a six-week period in 2017, Project Arachnid examined and processed 230 million web pages. It identified 5.1 million unique web pages hosting CSAM and detected over 40,000 unique images. Nearly half (48%) of all images for which Project Arachnid issued a removal notice had previously been flagged to the service provider.

“What the data clearly show is too many platforms are not utilising readily available technologies to reduce CSAM on their networks either on a meaningful scale or at all.”

And while these automated solutions can help remove content that has already been identified as illegal, the report is clear that human moderation is still required to identify such content in the first place, and to review images that are similar to previously flagged CSAM but not similar enough to be classified as such without a human double-checking.

The UN report concludes that it is essential not only to prioritise rescuing victims of newly created CSAM but also to take down repeat appearances of older material that is still causing harm to the children depicted in it. It reserves a specific call to action for financial actors.

While we await a summary of the proceedings from the Chair of the Vienna expert group meeting, we already know that 71 UN member states have made commitments recognising:

- the urgent need for action by governments, internet service providers and access providers, and other actors to protect children from online sexual exploitation and abuse and to facilitate a dialogue;

- the need for common data sets, for or among competent authorities, of known child sexual abuse materials;

- the need to increase public awareness of the serious nature of child sexual exploitation and child sexual abuse materials.

As a safety tech provider, VerifyMy’s mission is to rid the world of child abuse material, revenge porn and other illegal content, and to prevent minors from viewing or purchasing products and services that are not age-appropriate.

Find out how VerifyMy can help your business with its online safety and compliance challenges.